Running a growing e-commerce platform like Opszilla is thrilling. You’re processing thousands of orders daily across the US and Canada, scaling infrastructure, and expanding into new markets. But amidst all that momentum, something starts to break: your data infrastructure and database performance.

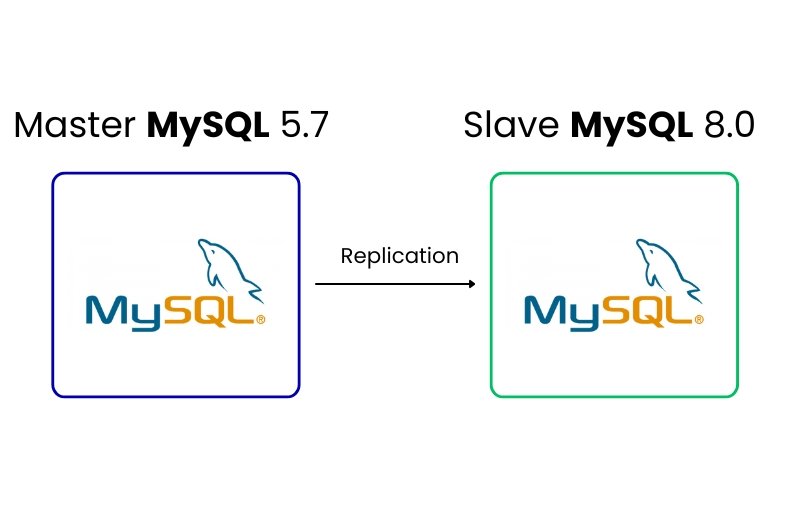

At first, it’s subtle—slower queries, lagging reports, a few scaling hiccups. Then the real issue surfaces: you’re still running on MySQL 5.7, a version nearing its end-of-life in October 2023.

Suddenly, you’re not just dealing with performance issues—you’re staring down a serious risk. No more updates. No more security patches. No future support. For a business built on real-time transactions, that’s not just inconvenient—it’s unacceptable.

This blog dives into a real-world scenario at Opszilla to unpack why upgrading to MySQL 8.0 isn’t just a technical decision—it’s a business-critical move. We’ll explore how Opszilla upgraded their MySQL 5.7 databases to MySQL 8.0.

This real-world case from OpsZilla highlights how effective data engineering services and database modernization can mitigate risk and enhance system performance

Why MySQL 8.0 Migration Matters in Modern Data Engineering

Migration Challenges (And How OpsZilla Solved Them)

Upgrading a live database while maintaining uptime, performance, and data integrity isn’t easy. OpsZilla faced a series of technical challenges—each of which had to be solved before the upgrade could be safely executed.

Zero Downtime Was Non-Negotiable

Even a few seconds of interruption could compromise transaction integrity, disrupt customer experience, or impact live analytics dashboards. The challenge was clear: migrate safely, quickly, and invisibly.

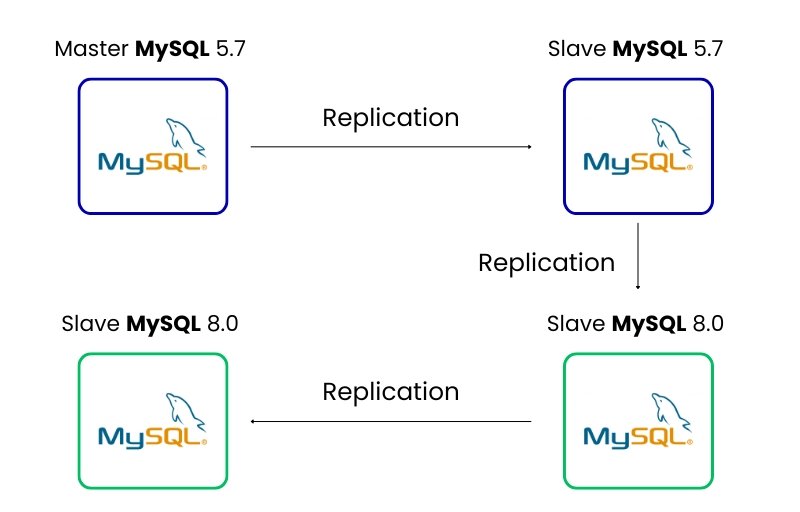

OpsZilla’s infrastructure spanned multiple environments. Some production clusters ran on a standalone MySQL server without replicas, while others had a primary node paired with one or more read replicas.

Each presented its own risks and required a tailored migration strategy:

- Scenario 1: Primary database only (no existing replica)

- Scenario 2: Primary with existing secondary replica

Scenario 1: Primary Database Only (No Existing Replica)

Challenge:

- A single MySQL 5.7 primary server with no replicas.

- Directly upgrading in-place risked downtime and performance bottlenecks.

- Large databases could cause mysqldump to strain the server if not optimized.

Solution: Minimizing Contention with Chunked Backups:

To avoid overloading the primary server during the backup process, we used a chunked mysqldump strategy with transaction consistency:

Take a Consistent Logical Backup:

- single-transaction ensures a consistent snapshot without locking tables.

- max_allowed_packet=500M prevents large transactions from failing.

Capture Binary Log Position for Replication Setup (If Needed):

- Record the File and Position to set up replication later.

Restore on MySQL 8.0 Instance:

Why This Worked:

- Avoided excessive load on the primary by controlling dump packet size.

- Ensured data consistency with transaction isolation.

- Enabled future replication setup if required.

This aligns with best practices in data engineering services for high-availability systems.

Scenario 2: Primary with Existing Secondary Replica

Challenge:

- A primary-replica setup where the primary handled live traffic.

- Running mysqldump directly on the primary could impact performance.

Solution: Offloading Dump to a Secondary Replica

Instead of burdening the primary, we used an existing (or temporary) replica to perform the backup:

Use a Read Replica for Backup:

- If no replica existed, we first set up a temporary one from the primary.

- Ensured the replica was in sync before proceeding.

Run mysqldump on the Replica:

No impact on primary server performance.

Restore to MySQL 8.0 & Reconfigure Replication:

- Import the dump into the new MySQL 8.0 primary.

- Set up new replicas under MySQL 8.0.

Why This Worked:

- Zero performance impact on the production primary.

- Allowed parallel testing of MySQL 8.0 before cutover.

- Minimized downtime by keeping the old primary operational during migration.

This approach reflects scalable data engineering practices essential for modern database upgrades.

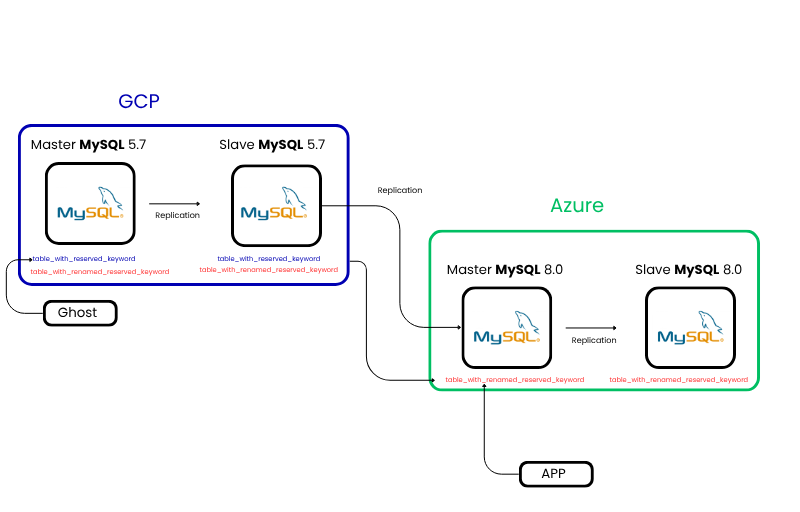

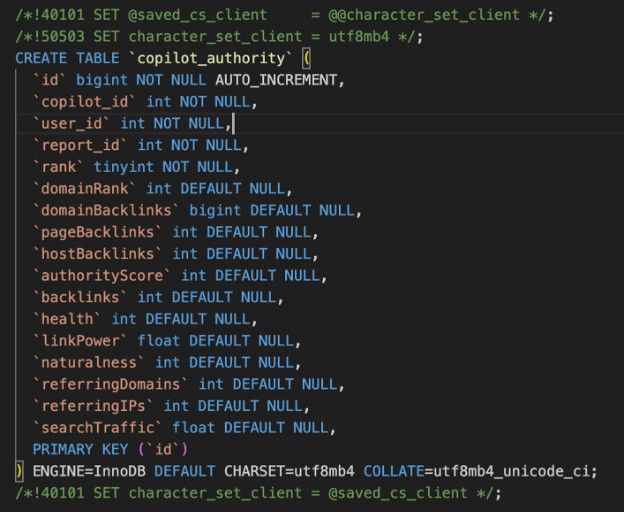

Reserved Keywords Broke Schema Imports

MySQL 8.0 introduced new reserved keywords such as RANK, SYSTEM, and WINDOW.

These words were not reserved in MySQL 5.7, so it was common to use them as column names without issues

In this scenario, the column rank was most commonly used in the following tables:

- copilot_authority

- analysis_citations

Both tables were tightly integrated with critical reporting pipelines and application services.

Analysis citations table:

The analysis_citations table stores external references or citations linked to analytical reports. The rank column is used to define the order or relevance of each citation.

Copilot authority: This table served as a recommendation engine to assign authority levels or trust scores to decisions, where rank was used to prioritize recommendations or rules.

Challenge:

- rank column broke queries due to MySQL 8.0 keyword conflict.

- Table was large and critical, so renaming risked downtime.

- Needed coordinated code changes across services using these tables.

`id` int(11) NOT NULL AUTO_INCREMENT,

`report_id` int(11) DEFAULT NULL,

`url` varchar(100) COLLATE utf8_unicode_ci DEFAULT NULL,

`rank` int(11) DEFAULT NULL,

`citation_link` varchar(2500) COLLATE utf8_unicode_ci DEFAULT NULL,

`citation_title` varchar(255) COLLATE utf8_unicode_ci DEFAULT NULL,

`url_host` varchar(150) COLLATE utf8_unicode_ci DEFAULT NULL,

`client_id` VARCHAR(255) CHARACTER SET utf8 COLLATE utf8mb3_unicode_ci NOT NULL,

`created_at` timestamp NULL DEFAULT CURRENT_TIMESTAMP,

`updated_at` TIMESTAMP DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`id`),

KEY `report id` (`report_id`),

KEY `url host` (`url_host`)

) ENGINE=InnoDB AUTO_INCREMENT=81731627 DEFAULT CHARSET=utf8 COLLATE=utf8_unicode_ci;

Solution: Safe Column Rename Using Online Schema Change Tools

Rename the Reserved Column

- Safely renames the rank column to user_rank without downtime using an online schema change.

Using GitHub gh-ost:

–database=opszilla_db \

–table=analysis_citations \

–user=root \

–password=your_password \

–host=your_db_host \

–execute

- Renames the rank column to user_rank online using GitHub’s gh-ost tool with zero downtime.

Why This Worked:

- Renamed the column safely with zero downtime.

- Avoided table locks and allowed live traffic during the change.

- Enabled safe rollback if anything went wrong.

- Fixed schema incompatibility and unblocked the MySQL 8.0 upgrade.

This is a proven technique in enterprise-grade data infrastructure upgrade

[Explore Our Case Study : AWS Cost Savings Through Database Optimization For Fintech]

Timestamp Columns Introduced Data Drift

The order_delivery table used for tracking every stage of an order’s delivery lifecycle—from shipment to final handoff. It included several TIMESTAMP columns with auto-update behaviors critical for real-time delivery tracking.

- Timestamp inaccuracy can confuse delivery status in customer-facing systems.

- Real-time dashboards use these timestamps for order tracking, delivery efficiency metrics, etc.

`delivery_id` int(11) NOT NULL AUTO_INCREMENT,

`order_id` int(11) NOT NULL,

`customer_id` int(11) NOT NULL,

`status` enum(‘PROCESSING’,’SHIPPED’,’IN_TRANSIT’,’OUT_FOR_DELIVERY’,’DELIVERED’) NOT NULL DEFAULT ‘PROCESSING’,

`order_date` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP,

`ship_date` timestamp NULL DEFAULT NULL,

`estimated_delivery` timestamp NULL DEFAULT NULL,

`actual_delivery` timestamp NULL DEFAULT NULL,

`carrier` varchar(20) DEFAULT NULL,

`tracking_number` varchar(50) DEFAULT NULL,

`last_updated` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP ON UPDATE CURRENT_TIMESTAMP,

PRIMARY KEY (`delivery_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4;

Challenge:

- MySQL 8.0 broke auto-update TIMESTAMP behavior due to stricter rules.

- TIMESTAMP values were lost during dump/import without correct flags.

- Timezone mismatches between servers caused timestamp shifts.

- These issues risked breaking real-time order tracking and dashboards.

Solution: Preserve Temporal Column Integrity During Migration

Take a Consistent Logical Backup

Used mysqldump with the right flags to preserve timestamp values and behaviors

Restore the Dump to MySQL 8.0 Target:

Ensured all DEFAULT CURRENT_TIMESTAMP and ON UPDATE definitions were retained.Verified schema integrity using SHOW CREATE TABLE

Use Row-Based Binary Logging

Prevents subtle data drift that may occur in statement-based logging.

Why This Worked:

- Preserved all TIMESTAMP values and auto-update settings accurately.

- Prevented data drift with row-based binary logging.

- Avoided schema issues caused by MySQL 8.0’s stricter timestamp rules.

A critical example of applying database modernization in real-world production environments.

Collation Mismatches Broke Unicode Handling

We faced issues with the customer_reviews table, which had mixed character sets and deprecated collations due to years of inconsistent schema changes. This affected Unicode support and query performance.

Challenge:

- Emoji data got corrupted due to 3-byte utf8_unicode_ci (couldn’t store 4-byte emojis).

- Mixed collations triggered “Illegal mix of collations” query failures.

- latin1 columns broke Unicode rendering after migration.

Solution: Standardize to utf8mb4 and Modern Collation Pre-Migration

To avoid corruption and query errors, we normalized all text columns in MySQL 5.7 before migration

Pre-Migration: Convert Entire Table to utf8mb4

Validate Emojis and Character Accuracy

😊%’;

Why This Worked:

- Fixed latin1 legacy data without corrupting product names.

- Eliminated collation-related query errors.

- Saved all emojis and special characters correctly.

- Table is now fully compatible with MySQL 8.0 features.

What are Collation and Charset

| Collation is like the “rule book” for how a database sorts and compares text |

| Whether letters are treated as uppercase or lowercase (case sensitivity) |

| How accented characters (like é, ñ) are handled |

| The order in which characters are sorted |

To prevent data corruption and ensure full compatibility with MySQL 8.0, we standardized the character

set and collation before performing the migration.

Pre-Migration Character Set Conversion

Collation Compatibility Matrix:

| MySQL 5.7 Source | MySQL 8.0 Replica | Notes | Recommendation |

| utf8mb4 |

utf8mb4 |

Perfect | Ideal for new deployments |

| utf8 (utf8mb3) | utf8mb3 | Legacy | Temporary migration state |

| utf8mb3 |

utf8mb4 |

Conditional |

Requires slave_type_conversions=ALL_NON_LOSSY |

| utf8mb4 | utf8mb3 | Dangerous

|

Data loss guaranteed |

[ Don’t miss our latest eBook: Ultimate Guide to Delivering End-to-End Data Strategy ]

Disk Space Exhaustion During Dump and Restore

Migrate OpsZilla’s 1TB MySQL database without increasing disk capacity, due to budget and infrastructure constraints.

Challenge:

- mysqldump nearly doubled disk usage during export.

- MySQL 8.0 restore generated extra temp files and binary logs.

- Disk space exhausted mid-restore, causing crashes and risk of data loss.

- Uncontrolled binary log growth led to “Disk Full” errors.

- Leftover logs after migration increased storage costs.

Solution: Optimizing Storage with Compression and Log Management

Take a Compressed Logical Backup:

- Consistent snapshot without table locks

Stream Restore with On-the-Fly Decompression:

- Avoids writing a large uncompressed .sql file to disk.

Temporarily Disable Logs During Restore

SET GLOBAL slow_query_log = ‘OFF’;

- Reduces unnecessary disk writes and speeds up restore

Purge Binary Logs Aggressively

— OR

SET GLOBAL expire_logs_days = 0;

- Automatically removes old logs to prevent storage bloat.

Monitor Disk Space in Real-Time

- Proactively tracks usage to avoid mid-operation crashes.

Why This Worked:

- Compressed backups minimized storage overhead.

- Streamed restore reduced disk usage and I/O strain.

- Prevented downtime from full-disk crashes.

Reduced storage costs by auto-cleaning unused logs.

I/O Bottlenecks During Bulk Restore Operations

Challenge:

- Binary logs from bulk imports filled disk rapidly..

- Restore failed midway due to lack of space.

- Old binlogs weren’t cleaned, bloating disk usage.

Solution: Tuning InnoDB for Optimized Disk I/O During Migration

Adjust Transaction Log Flushing Behavior:

Enable Direct Disk Writes for Data Files:

Bypasses OS cache to reduce double-buffering.

Optimizing InnoDB I/O Performance: innodb_flush_log_at_trx_commit

innodb_flush_log_at_trx_commit is a critical InnoDB parameter that controls how frequently transaction logs are flushed to disk, directly impacting the balance between data durability and write performance in MySQL/InnoDB.

| Setting | Flush Behavior | Durability | Performance | Recommended Use Case |

| 1 (Default) |

Flush logs to disk on every transaction commit |

Highest durability | Slowest | Production environments requiring full ACID compliance |

| 2 | Flush logs to OS cache on commit, disk once per second | Moderate durability (survives OS crash but not power failure) | Faster | Data migrations/restores where speed is prioritized |

| 0 |

Write to OS cache, flush to disk once per second |

Lowest durability |

Faster | Temporary test environments only |

Optimizing InnoDB I/O Performance: innodb_flush_log_trx_commit

innodb_flush_method is a critical parameter that controls how InnoDB interacts with the filesystem for reading and writing data and log files. It has a significant impact on I/O performance during both migrations and normal operations, especially in disk-intensive environments.

| Method | Description | Durability | Performance | Recommended Use |

| fsync (default) |

Uses fsync() for both data and log files |

Highest | Slowest | General purpose, most compatible |

| O_DSYNC | Opens log files with O_SYNC (immediate sync) | High | Moderate | Rarely better than fsync |

| O_DIRECT |

Bypasses OS cache for data files |

High |

Fast for writes | Recommended for most Linux systems |

| O_DIRECT_NO_FSYNC | Like O_DIRECT but skips final fsync | Risky | Fastest | Not recommended for production |

Why This Worked:

- Less I/O load made imports faster.

- Restore finished quicker without data loss.

- Worked well for large, write-heavy tables.

Authentication Plugin Mismatch Broke User Logins

MySQL 8.0 introduced changes in the default authentication plugin, switching from mysql_native_password to caching_sha2_password. This change caused compatibility issues for existing applications

Challenge:

- App logins failed with ERROR 2059: plugin caching_sha2_password not supported.

- Legacy clients couldn’t connect until their config was updated.

- mysql.user schema changes (e.g., new authentication_string column) broke user migration via mysqldump

Solution: Revert to Compatible Authentication Plugin During Migration

Identify Users with Old Plugins in MySQL 5.7

Audit current auth plugins before upgrade.

Pre-Migration: Force Legacy Plugin for Compatibility

Ensures all new users default to legacy plugin.

Post-Migration: Update Authentication Plugin in MySQL 8.0

IDENTIFIED WITH caching_sha2_password

BY ‘secure_pass’;

For services that support it, switch to the modern plugin

Why This Worked:

- Old apps could connect right after migration — no breakage.

- Prevented downtime from unexpected login failures.

- Allowed smooth, controlled transition to the secure caching_sha2_password plugin later.

Conclusion:

“By following these best practices in data engineering and cloud-native database modernization OpsZilla not only eliminates the risks of running an unsupported MySQL version but also unlocked performance improvements and modern features in MySQL 8.0—future-proofing their database infrastructure for continued growth.