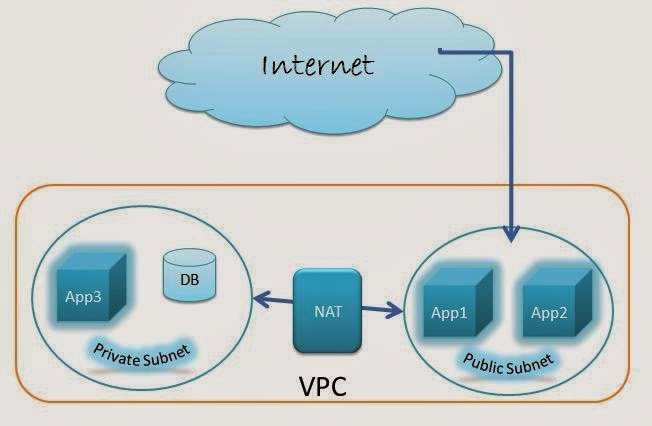

Before directly jumping on how we achieved instance management in an automated fashion, I like to state the problem that we were facing. Our application testing infrastructure is on AWS and it is a multiple components(20+) application distributed among 8-9 Amazon instances. Usually our testing team starts working from 10 am, and continues till 7 pm. Earlier we used to keep our testing infrastructure up for 24 hours, even though we were using it for only 9 hours on weekdays, and not using it at all on weekends. Thus, we were wasting more then 50% of the money that we spent on the AWS infrastructure. The obvious solution to this problem was: we needed an intelligent system that would make sure that our amazon infrastructure was up only during the time when we needed it.

The detailed list of the requirements, and the corresponding things that we did were:

- We should shut down our infrastructure instances when we are not using them.

- There should be a functionality to bring up the infrastructure manually: We created a group of Jenkins jobs, which were scheduled to run at a specific time to start our infrastructure. Also a set of people have execution access to these jobs to start the infrastructure manually, if the need arises.

- We should bring up our infrastructure instances when we need it.

- There should be a functionality to shut down the infrastructure manually: We created a group of Jenkins jobs that were scheduled to run at a specific time to shut down our infrastructure. Also a set of people have execution access on these jobs to shut down the infrastructure manually, if the need arises.

- Automated application/services start on instance start: We made sure that all the applications and services were up and running when the instance was started.

- Automated graceful application/services shut down before instance shut down: We made sure that all the applications and services were gracefully stopped before the instance was shut down, so that there was be no loss of data.

- We also had to make sure that all the applications and services should be started as per defined agreed order.

Once we had the requirements ready, implementing them was simple, as Amazon provides a number of APIs to achieve this. We used AWS CLI, and needed to use just 2 simple commands that AWS CLI provides.

The command to start an instance :

aws ec2 start-instances –instance-ids i-XXXXXXXX

The command to stop an instance :

aws ec2 stop-instances –instance-ids i-XXXXXXXX

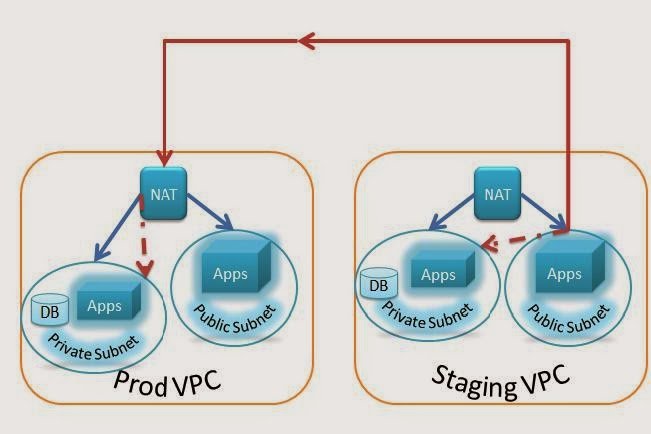

Through above commands you can automate starting and stopping AWS instances, but you might not be doing it the correct way. As you didn’t restrict the AWS CLI allow firing of start-instances and stop-instances commands only, you could use other commands and that could turn out to be a problem area. Another important point to consider is that we should restrict the AWS instances on which above commands could be executed, as these commands could be mistakenly run with the instance id of a production amazon instance id as an argument, creating undesirable circumstances 🙂

In the next blog post I will talk about how to start and stop AWS instances in a correct way.