In today’s fast-paced development environments, security, reliability, and system performance are critical. One of the fundamental practices that help maintain these standards is patching. While often overlooked, patching plays a vital role in the DevOps lifecycle.

Tag: Cloud Infra

Checkov a Must Tool for Infra CI

As organizations move more of their operations to the cloud, secure and compliant infrastructure becomes increasingly important. With cloud adoption moving this quickly, you need a tool that helps ensure your cloud infrastructure is configured securely and follows best practices. In this post we look at one such tool: Checkov.

What is Checkov?

Checkov is a tool that helps developers and operations teams ensure that their infrastructure is secure and compliant with best practices. It does this by automatically scanning infrastructure as code (IaC) and runtime environments for issues that could potentially lead to security vulnerabilities or compliance failures. Checkov works by scanning code written in various IaC languages (such as Terraform, CloudFormation, and ARM templates) and looking for patterns that could indicate security or compliance risks. It can also be integrated into a continuous integration/continuous deployment (CI/CD) pipeline, allowing it to scan code automatically as it is being developed and deployed.
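To make the idea of "scanning IaC for risky patterns" concrete, here is a minimal, self-contained sketch of a policy scanner. It is not Checkov's implementation or API: the resource dictionaries, the `Policy` class, and the `EXAMPLE_*` identifiers are all made up for illustration, and a real tool would parse actual Terraform or CloudFormation files.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical, simplified stand-in for parsed IaC resources (plain dicts).
Resource = dict


@dataclass(frozen=True)
class Policy:
    policy_id: str                      # made-up ID, not a real Checkov check ID
    description: str
    resource_type: str                  # resource type the policy applies to
    passes: Callable[[Resource], bool]  # returns True when the resource is compliant


POLICIES = [
    Policy(
        "EXAMPLE_1",
        "Storage bucket must not be publicly readable",
        "storage_bucket",
        lambda r: r.get("acl", "private") == "private",
    ),
    Policy(
        "EXAMPLE_2",
        "Firewall rule must not open SSH to the whole internet",
        "firewall_rule",
        lambda r: not (r.get("port") == 22 and r.get("cidr") == "0.0.0.0/0"),
    ),
]


def scan(resources):
    """Return a (policy_id, resource_name) pair for every failed policy."""
    findings = []
    for res in resources:
        for policy in POLICIES:
            if res.get("type") == policy.resource_type and not policy.passes(res):
                findings.append((policy.policy_id, res.get("name", "<unnamed>")))
    return findings


# Illustrative input only.
resources = [
    {"type": "storage_bucket", "name": "logs", "acl": "public-read"},
    {"type": "storage_bucket", "name": "backups", "acl": "private"},
    {"type": "firewall_rule", "name": "ssh", "port": 22, "cidr": "0.0.0.0/0"},
]

if __name__ == "__main__":
    for policy_id, name in scan(resources):
        print(f"FAILED {policy_id}: {name}")
```

In a CI pipeline, a scanner like this would typically exit with a non-zero status when `scan` returns any findings, so that a misconfigured change fails the build before it is deployed.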

Chef Journey

- User Management: Creating or deleting users in an environment (Dev/QA/Staging/Production) should be managed by Chef, along with the kind of access each user has in that environment, i.e. read-only access, root access, or membership in certain groups.

- VPN Setup: We currently use openvpnas to manage secured access to our environment. The process is manual right now, so the VPN setup will also be done by Chef.

- Apache Setup: We use Apache as the web server that sits in front of our app server and also provides SSL.

- Jar App: We have an SOA-based setup with multiple Java microservices, so we will use Chef to manage those jar apps, i.e. deploying, starting, and stopping them.

- Tomcat: Another major component type in our application is web apps hosted on a Tomcat server. Tomcat is not managed as a service; instead we create Tomcat under an app user, along with Tomcat management scripts.

- Mongo: We use replicated Mongo as the NoSQL database in our application.

- Logstash: For managing logs we use Logstash in a clustered setup where all the log agents publish their logs to a central server, from which they are served by Kibana. This complete setup should also be managed by Chef.

- ActiveMQ: We use ActiveMQ for queuing.

This list is surely not complete. As this is my first time doing a set-up with Chef, I’ll add more tasks to it as I proceed in setting up my environment, but it is a good starting point.

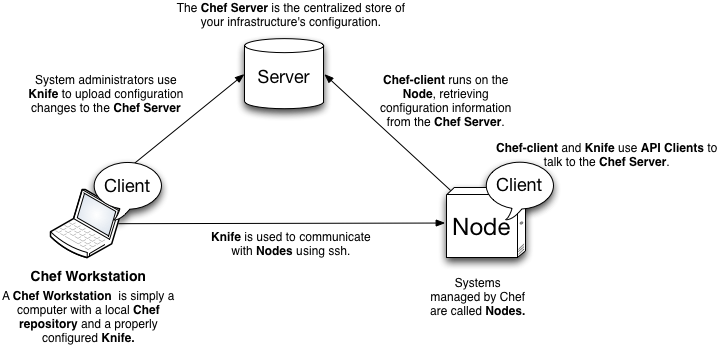

Before jumping into creating Chef cookbooks, run lists, or data bags, I have to set up the base Chef infrastructure: a Chef server that all Chef agents talk to, a Chef workstation that updates the server with the configurations, and a git repo to keep track of all my configuration, as shown in the accompanying image.

In the next blog I’ll talk about how I’ll set up a Chef server. Let me know if you have any inputs or suggestions on how I should proceed with the Chef set-up.

VPC per environment versus Single VPC for all environments

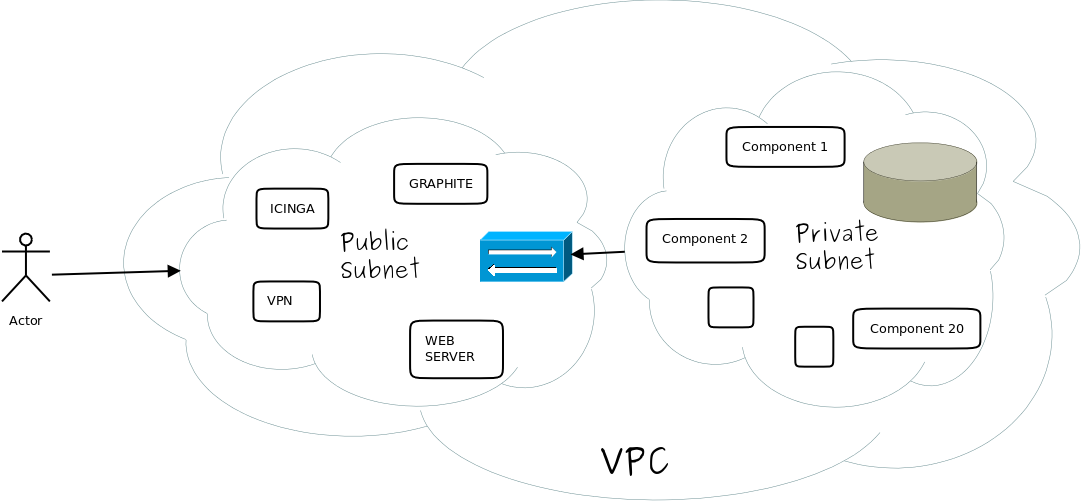

Before jumping into the real talk, I would like to give a bit of background on how I came up with this blog. I was working with a client, managing his cloud infrastructure, where we had 4 environments (dev, QA, Pre Production, and Production) and each environment had close to 20 instances. Apart from application instances there were some admin instances as well, such as Icinga for monitoring, Logstash for consolidating logs, a Graphite server to view the logs, and a VPN server to manage people’s access.

At this point we got into a discussion about whether the current infrastructure set-up, with a separate VPC per environment, was the right one, or whether the ideal set-up would have been a single VPC with the environments separated by subnets, i.e. a pair of subnets (public and private) for each environment.
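As a rough illustration of the single-VPC option, the sketch below carves one VPC address range into a public/private subnet pair per environment using Python’s standard `ipaddress` module. The `10.0.0.0/16` range, the `/24` subnet size, and the environment names are assumptions chosen for the example, not values from the client set-up.

```python
import ipaddress


def plan_subnets(vpc_cidr, environments, new_prefix=24):
    """Assign one public and one private subnet to each environment.

    Subnets are handed out in order from the start of the VPC range.
    Raises StopIteration if the VPC is too small for all environments.
    """
    vpc = ipaddress.ip_network(vpc_cidr)
    subnets = vpc.subnets(new_prefix=new_prefix)  # generator of equal-sized subnets
    plan = {}
    for env in environments:
        plan[env] = {"public": next(subnets), "private": next(subnets)}
    return plan


# Assumed VPC range and environment names, for illustration only.
plan = plan_subnets("10.0.0.0/16", ["dev", "qa", "preprod", "prod"])

if __name__ == "__main__":
    for env, pair in plan.items():
        print(f"{env}: public={pair['public']} private={pair['private']}")
```

With a VPC per environment, the same function could instead be called once per VPC with a single-element environment list.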

Both approaches have their pros and cons.

Single VPC set-up

Pros:

- You only have a single VPC to manage

- You can consolidate your admin apps, such as Icinga and the VPN server.

Cons:

- As you are separating your environments through subnets, you need granular access control at the subnet level, i.e. instances in the staging environment should not be allowed to talk to instances in the dev environment. Similarly, you have to control people’s access at a granular level as well.

- The scope for human error is high, as all the instances will be in the same VPC.
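The subnet-level isolation described in the first con can be expressed as a small rule check. This is only a sketch under assumptions: the subnet layout is hypothetical, and the rule that the shared admin subnet (monitoring, VPN) may reach every environment is an illustrative choice, not something the real set-up is known to use. In practice such rules would be enforced with network ACLs or security groups rather than application code.

```python
import ipaddress

# Hypothetical subnet layout inside a single VPC.
ENV_SUBNETS = {
    "dev": ["10.0.0.0/24", "10.0.1.0/24"],
    "staging": ["10.0.2.0/24", "10.0.3.0/24"],
    "admin": ["10.0.250.0/24"],
}


def environment_of(ip):
    """Return the environment whose subnets contain ip, or None."""
    addr = ipaddress.ip_address(ip)
    for env, cidrs in ENV_SUBNETS.items():
        if any(addr in ipaddress.ip_network(cidr) for cidr in cidrs):
            return env
    return None


def is_allowed(src_ip, dst_ip):
    """Allow traffic within one environment, or from the admin subnet (assumed rule)."""
    src, dst = environment_of(src_ip), environment_of(dst_ip)
    if src is None or dst is None:
        return False  # unknown addresses are denied by default
    return src == dst or src == "admin"


if __name__ == "__main__":
    print(is_allowed("10.0.2.10", "10.0.0.5"))   # staging to dev
    print(is_allowed("10.0.0.4", "10.0.1.9"))    # dev to dev
    print(is_allowed("10.0.250.3", "10.0.2.10"))  # admin to staging
```

Every additional environment adds more pairs of subnets that must be kept apart, which is where the scope for human error comes from.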

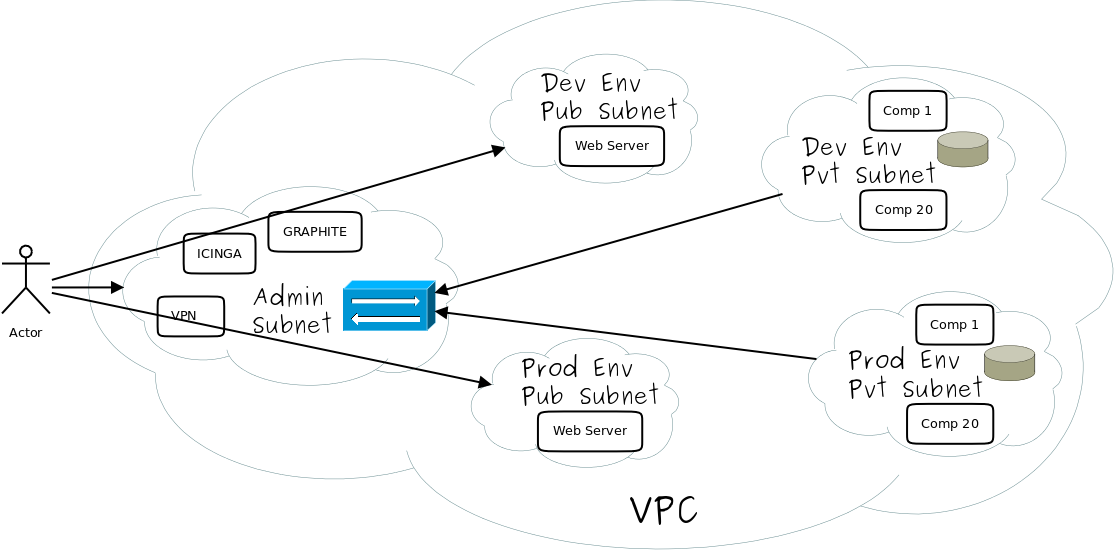

VPC per environment setup

Pros:

- You have a clear separation between your environments due to separate VPCs.

- You will have finer access control on your environments, as the access rules for a VPC will effectively be the access rules for that environment.

- As an admin, it gives you a clear picture of your environments, and you have the option to clone your complete environment very easily.

Cons:

- As mentioned in the pros of the single VPC set-up, you are at some financial loss, as you would be duplicating admin applications across environments.

In my opinion, the choice of set-up largely depends on the scale of your environment. If you have a small or even medium-sized environment, you can have your infrastructure set up as “all environments in a single VPC”. For a large set-up, I strongly believe that VPC per environment is the way to go.

Let me know your thoughts, along with any points in favour of or against either approach.

A wrapper over linode python API bindings

Recently I’ve been working on automating node creation on our Linode infrastructure. In the process I came across the Linode API and its bindings. Though they are powerful, they are lacking in some places, for example:

- With the Linode CLI, you have to enter the root password while creating a linode, so you can’t achieve full automation. I was also not able to find an option to add a private IP to the linode.

- With the Linode API Python binding, you can’t straight away create a running linode machine.

I recently launched a new GitHub project: a wrapper over the existing Python bindings for Linode that tries to make working with the Linode API easier. Currently, using this project, you can create a linode with 3 lines of code:

```python
from linode import Linode

# 'node_identifier' is the name used to identify the new linode
linode = Linode('node_identifier')
linode.create()
```

You just need to have a properties file, /data/linode/linode.properties:

```
UBUNTU_DIST=Ubuntu 12.04
KERNEL_LABEL=Latest 64 bit
DATACENTER_LABEL=Dallas
PLAN_ID=1024
ROOT_SSH_KEY=
LINODE_API=
```
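For readers curious how such a file might be consumed, here is a minimal sketch of a `KEY=VALUE` parser. It is not taken from the wrapper project: `parse_properties` and `load_properties` are hypothetical helper names, and treating `#` lines as comments is an assumption about the file format.

```python
from pathlib import Path


def parse_properties(text):
    """Parse KEY=VALUE lines into a dict, skipping blank lines and # comments."""
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            raise ValueError(f"not a KEY=VALUE line: {raw!r}")
        props[key.strip()] = value.strip()
    return props


def load_properties(path="/data/linode/linode.properties"):
    """Read and parse the properties file at the path used in this post."""
    return parse_properties(Path(path).read_text())


# Same keys as the file above; the two empty values must be filled in by the user.
sample = """
UBUNTU_DIST=Ubuntu 12.04
KERNEL_LABEL=Latest 64 bit
DATACENTER_LABEL=Dallas
PLAN_ID=1024
ROOT_SSH_KEY=
LINODE_API=
"""

props = parse_properties(sample)
missing = [key for key, value in props.items() if not value]

if __name__ == "__main__":
    print("still to be filled in:", missing)
```

Checking for empty values up front, as `missing` does here, gives a clearer error than failing later with an unauthenticated API call.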