Rocket science has always fascinated me, but the one thing that totally blows my mind is the concept of modules, aka modular rockets. By definition, “A modular rocket is a type of multistage rocket which features components that can be interchanged for specific mission requirements.” In simple terms, the Super Rocket depends on those submodules to get things done.

The same is true in the software world, where superprojects depend on many other projects. And when we talk about managing projects, Git can’t be ignored. Git has a concept of submodules, which feels slightly inspired by the amazing rocket science of modules.

Hour of Need

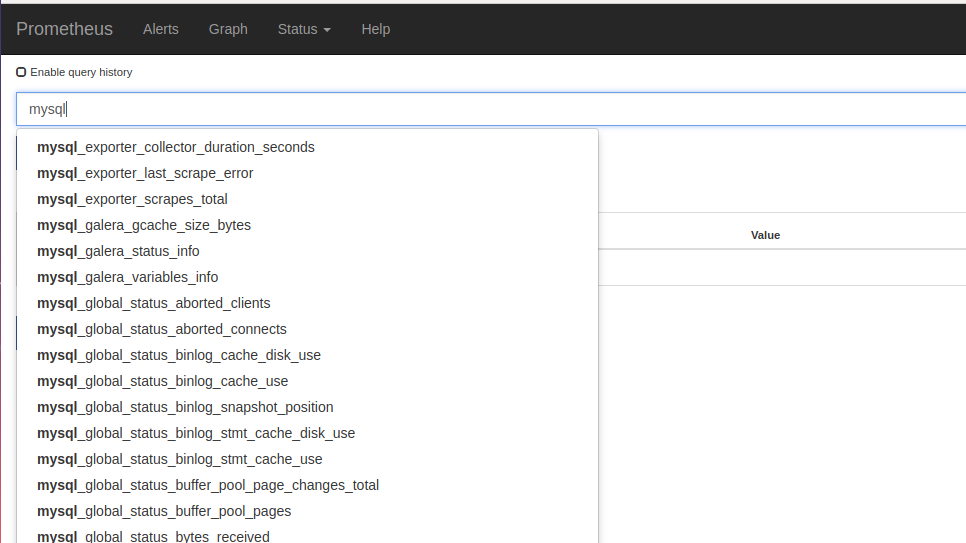

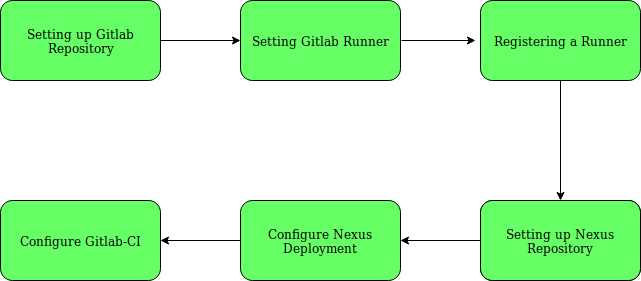

As DevOps specialists, we provision infrastructure for our clients, and much of that work is common to most of them. So we decided to automate it, as DevOps engineers habitually do. Opstree Solutions started an internal project named OSM, in which every member of our organization contributes Ansible roles for different open-source software. Those roles can then be used to provision a client’s infrastructure.

This makes the client projects dependent on OSM, which raises a problem: how do we manage dependencies that keep getting updated over time? Doing it by hand means a lot of copy-pasting, deleting repositories, and cloning them again to get the updated version, which is a hair-pulling task and obviously not a best practice.

Here comes the git-submodule as a modular rocket to take our Super Rocket to its destination.

Let’s Liftoff with Git-Submodules

In simple terms, a submodule is a Git repository inside a superproject’s Git repository. It has its own .git data holding everything version control needs: its commits, its remote repository address, and so on. It is like a repository attached inside your main repository, whose code you can reuse as a “module”.

Let’s get a practical use case of submodules.

We have a client, let’s call them “Armstrong”, who needs a few of our OSM Ansible roles to provision their infrastructure. Let’s have a look at their Git repository below.

$ cd provisioner

$ ls -a

. .. ansible .git inventory jenkins playbooks README.md roles

$ cd roles

$ ls -a

apache java nginx redis tomcat

$ cd java

$ git submodule add -b armstrong git@gitlab.com:oosm/osm_java.git osm_java

Cloning into './provisioner/roles/java/osm_java'...

remote: Enumerating objects: 23, done.

remote: Counting objects: 100% (23/23), done.

remote: Compressing objects: 100% (17/17), done.

remote: Total 23 (delta 3), reused 0 (delta 0)

Receiving objects: 100% (23/23), done.

Resolving deltas: 100% (3/3), done.

With the above command, we add a submodule named osm_java whose URL is git@gitlab.com:oosm/osm_java.git and whose branch is armstrong. The branch is named armstrong because, to keep each client’s configuration isolated, we created individual branches in OSM’s repositories named after the client.

Now if we take a look at our superproject provisioner, we can see a file named .gitmodules, which holds the information about the submodules.
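To see what that command leaves behind in the superproject, here is a minimal sandbox sketch. It assumes a throwaway local repository standing in for osm_java (the real one lives on GitLab), a demo commit identity, and `-c protocol.file.allow=always`, which recent Git releases need before they will clone a submodule from a local path. None of that is required with the SSH URL used in this post.

```shell
#!/bin/sh
set -eu

# Throwaway sandbox with a fixed identity so the commits below succeed anywhere.
work=$(mktemp -d)
cd "$work"
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

# Local stand-in (assumption) for the osm_java role repo, with an "armstrong" branch.
git init -q osm_java
echo "java role" > osm_java/README.md
git -C osm_java add README.md
git -C osm_java commit -q -m "Initial role"
git -C osm_java checkout -q -b armstrong

# Stand-in superproject with the same roles/java layout as in the post.
git init -q provisioner
git -C provisioner commit -q --allow-empty -m "Initial superproject"
mkdir -p provisioner/roles/java
cd provisioner/roles/java

# Same command as above, pointed at the local stand-in instead of GitLab.
git -c protocol.file.allow=always submodule --quiet add -b armstrong "$work/osm_java" osm_java

# The add stages both .gitmodules and the submodule path; it does not commit them.
cd "$work/provisioner"
git status --short
git commit -q -m "Add osm_java role as a submodule"

# The superproject stores the submodule as a single commit pointer (mode 160000).
git ls-tree HEAD roles/java/
```

The point of the last command is that the superproject does not copy the role’s files into its own history. It records only which commit of osm_java it expects.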

$ cd provisioner

$ ls -a

. .. ansible .git .gitmodules inventory jenkins playbooks README.md roles

$ cat .gitmodules

[submodule "roles/java/osm_java"]

path = roles/java/osm_java

url = git@gitlab.com:oosm/osm_java.git

branch = armstrong

Here you can clearly see that a submodule osm_java has been attached to the superproject provisioner.
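Because .gitmodules is committed with the superproject, anyone who clones provisioner can fetch the submodule from it. The sketch below shows that flow under a few assumptions: local throwaway repositories stand in for the GitLab ones, the `teammate` directory name is made up for illustration, and `-c protocol.file.allow=always` is only there because the stand-in URL is a local path.

```shell
#!/bin/sh
set -eu

# Throwaway sandbox with a fixed identity so the commits below succeed anywhere.
work=$(mktemp -d)
cd "$work"
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

# Local stand-in (assumption) for the osm_java role repo, with an "armstrong" branch.
git init -q osm_java
echo "java role" > osm_java/README.md
git -C osm_java add README.md
git -C osm_java commit -q -m "Initial role"
git -C osm_java checkout -q -b armstrong

# Stand-in superproject that already has the submodule added and committed.
git init -q provisioner
git -C provisioner commit -q --allow-empty -m "Initial superproject"
git -C provisioner -c protocol.file.allow=always \
    submodule --quiet add -b armstrong "$work/osm_java" roles/java/osm_java
git -C provisioner commit -q -m "Add osm_java role as a submodule"

# A plain clone brings .gitmodules along but leaves the submodule directory empty.
git clone -q provisioner teammate
git -C teammate submodule status   # a leading "-" marks an uninitialized submodule

# Initialize the submodule and check out the commit recorded by the superproject.
git -C teammate -c protocol.file.allow=always submodule --quiet update --init
git -C teammate submodule status
```

Passing `--recurse-submodules` to `git clone` should combine the clone and the initialization into one step.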

What if there was no submodule?

In that case, we would need to clone the repository from OSM, paste it into provisioner, then add and commit it to provisioner. Phew… that would also have worked.

But what if an update made in osm_java has to be used in provisioner? We cannot easily sync with OSM. We would need to delete osm_java, then clone, copy, and paste it into provisioner again, which sounds clumsy and is not the best way to automate the process.

With osm_java as a submodule, we can easily update this dependency without messing things up.

$ git submodule status

-d3bf24ff3335d8095e1f6a82b0a0a78a5baa5fda roles/java/osm_java

$ git submodule update --remote

remote: Enumerating objects: 3, done.

remote: Counting objects: 100% (3/3), done.

remote: Total 2 (delta 0), reused 2 (delta 0), pack-reused 0

Unpacking objects: 100% (2/2), done.

From git@gitlab.com:oosm/osm_java.git

0564d78..04ca88b armstrong -> origin/armstrong

Submodule path 'roles/java/osm_java': checked out '04ca88b1561237854f3eb361260c07824c453086'

With the above update command, we have successfully updated the submodule, which pulled the changes from the armstrong branch of OSM’s origin.
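One follow-up step is worth sketching: the update moves the submodule checkout, but the superproject still records the old commit until the new pointer is committed. The example below assumes local throwaway repositories in place of the GitLab ones, a demo commit identity, and `-c protocol.file.allow=always` for the local-path fetch. The commit messages are made up for illustration.

```shell
#!/bin/sh
set -eu

# Throwaway sandbox with a fixed identity so the commits below succeed anywhere.
work=$(mktemp -d)
cd "$work"
export GIT_AUTHOR_NAME=demo GIT_AUTHOR_EMAIL=demo@example.com
export GIT_COMMITTER_NAME=demo GIT_COMMITTER_EMAIL=demo@example.com

# Local stand-in (assumption) for the osm_java role repo, with an "armstrong" branch.
git init -q osm_java
echo "java role" > osm_java/README.md
git -C osm_java add README.md
git -C osm_java commit -q -m "Initial role"
git -C osm_java checkout -q -b armstrong

# Stand-in superproject that tracks the armstrong branch of the role.
git init -q provisioner
git -C provisioner commit -q --allow-empty -m "Initial superproject"
git -C provisioner -c protocol.file.allow=always \
    submodule --quiet add -b armstrong "$work/osm_java" roles/java/osm_java
git -C provisioner commit -q -m "Add osm_java role as a submodule"

# Upstream publishes a new commit on the armstrong branch.
echo "updated" >> osm_java/README.md
git -C osm_java commit -q -am "Update role"

# Fetch the branch named in .gitmodules and check out its latest commit.
cd "$work/provisioner"
git -c protocol.file.allow=always submodule --quiet update --remote

# The submodule path now shows as modified; commit it to record the new pointer.
git status --short
git add roles/java/osm_java
git commit -q -m "Bump osm_java to the latest armstrong commit"
```

Committing the pointer is what lets everyone else who uses provisioner pick up the same version of the role on their next submodule update.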

What have we learned?

Referred links:

Image: google.com

Documentation: https://git-scm.com/docs/gitsubmodules