In cloud environments, protecting sensitive data and resources without degrading the user experience is essential. Azure Conditional Access addresses this need by letting organizations strengthen their security posture through dynamic access controls. This blog post explains what Azure Conditional Access is and covers its significance, core components, implementation steps, and real-world benefits.

Understanding Azure Conditional Access

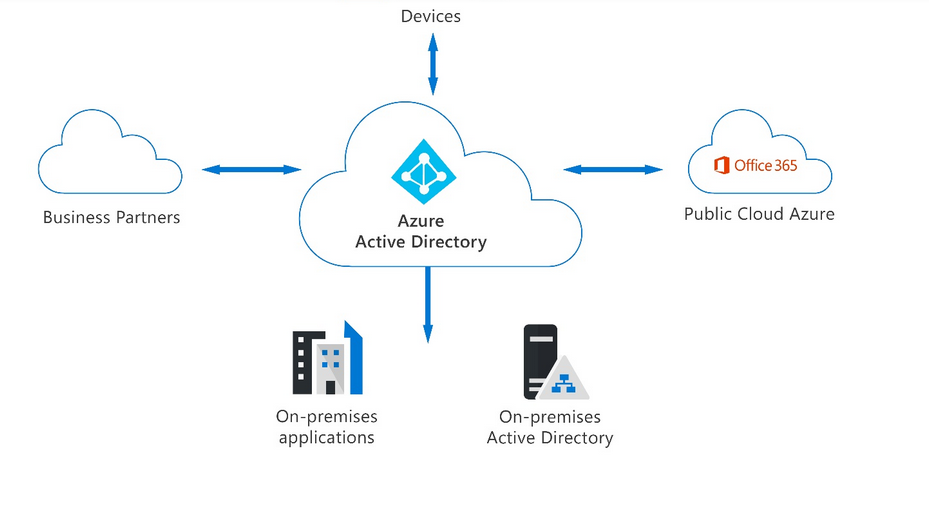

Azure Conditional Access is a feature of Azure Active Directory (since renamed Microsoft Entra ID) that lets organizations enforce access rules based on specified conditions. These conditions include factors such as user identity, device health, location, and sign-in risk. By evaluating these signals at sign-in, Conditional Access policies decide whether a user is granted access, blocked, or granted access only after meeting additional requirements such as multi-factor authentication, which helps stop unauthorized access attempts.
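To make the idea concrete, here is a minimal Python sketch of how conditions such as device health, location, and sign-in risk might be combined into an access decision. It is a toy model for illustration only, not the Azure API or the actual evaluation engine: the names `SignIn`, `Decision`, `evaluate`, and `TRUSTED_COUNTRIES`, and the specific rules, are all assumptions made for this example.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REQUIRE_MFA = "require_mfa"
    BLOCK = "block"


@dataclass(frozen=True)
class SignIn:
    """Hypothetical snapshot of the signals seen at sign-in."""
    user: str
    device_compliant: bool
    country: str
    risk: str  # assumed to be "low", "medium", or "high"


# Hypothetical list of locations the organization trusts.
TRUSTED_COUNTRIES = frozenset({"US", "CA"})


def evaluate(sign_in: SignIn) -> Decision:
    """Return the most restrictive decision that applies to this sign-in."""
    # High-risk sign-ins are blocked outright.
    if sign_in.risk == "high":
        return Decision.BLOCK
    # Any weaker warning sign triggers a step-up requirement.
    if (
        sign_in.risk == "medium"
        or not sign_in.device_compliant
        or sign_in.country not in TRUSTED_COUNTRIES
    ):
        return Decision.REQUIRE_MFA
    # Compliant device, trusted location, low risk.
    return Decision.ALLOW


if __name__ == "__main__":
    print(evaluate(SignIn("alice", True, "US", "low")))
    print(evaluate(SignIn("bob", False, "US", "low")))
    print(evaluate(SignIn("carol", True, "US", "high")))
```

The sketch checks the most restrictive outcome first, so a block always wins over a step-up requirement. Real policies are configured in the Azure portal or through Microsoft Graph rather than written as code like this.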
